I have two personal mottoes:

The first one is from a Talking Heads song, and the second one is the title of this blog because it applies to a lot of the most interesting questions:

- Is the world simple or complicated?

- Are we the product of our genes or our environment?

- Are we more different from one another or more the same?

- Is the good of a group more important than its members?

- Should tradition take precedence over change?

- Is justice superior to mercy?

- Is it better to have things or do things?

- Is work more important than play?

- Should reason rule over emotion?

- Do generations shape history or are they shaped by history?

- Is mathematics discovered or invented?

Many of the posts and favorite texts here were prompted by a book I’d recently read or been reminded of, most likely non-fiction: the history of science, crime investigations, important ideas. Writing about them helps me organize my thoughts and lets the lessons sink in. For me, posting things here is like exercise, which is to say I consider it good for me whether anyone else notices or not.

In addition to reading books and writing this blog, I wrote a book about the former mayor of my small city because I believed his story was so compelling it deserved to be told. You can buy it here.

A lot of the other content here—linked from the items in the header—has migrated over from my previous personal sites. I brought it along because there’s a lot of me in those words, pictures and code. And I realize now, there always will be.

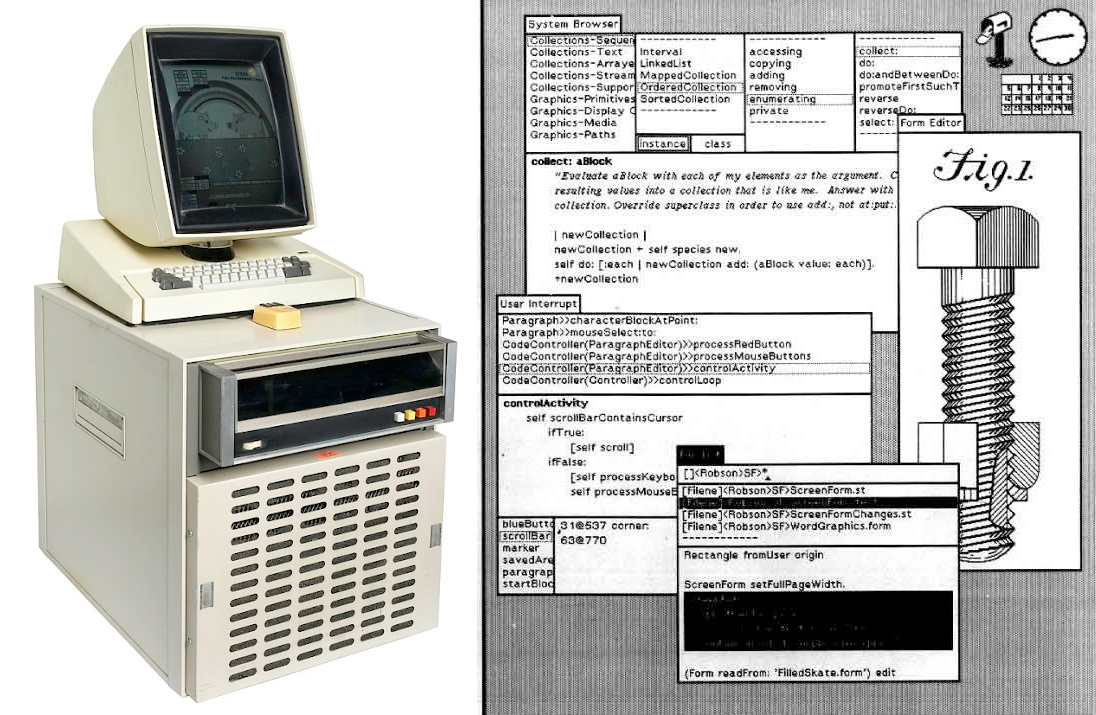

In the early days of the World Wide Web, designing and creating a personal web site was a bit like building your own house on the prairie, as opposed to just moving into an established neighborhood, as is more often the case now. I began creating my first web site at a free hosting provider in 1996 as a hobby, but many of the skills I learned in the process (HTML, CSS, JavaScript, digital image formats) worked their way over to my career and helped me make a living. Like any creative expression, a big part of building your own web site was deciding what it would be about: What do I have some knowledge of? What can I contribute?

Around that time, I attended the Canadian Grand Prix several years in a row, so Formula One racing became my site’s initial primary topic. I posted photographs and descriptions of my trip to Montreal each year, and I also set out to catalog the history of all the Formula One races in the United States, including the ones I’d been to in the 1970s. I bought books and made regular trips to the library for race accounts going back to the 1950s in Road & Track magazine. I thought it was pretty cool that in the first few months, Yahoo! created a category just for me, and I received email comments from Formula One fans in 17 countries who visited my site. One guy cursed me in his message for all the time he’d spent reading the information there.

At that time, as the web was just beginning to be configured, explored and staked out, it was intoxicating to plant something in that fertile ground. It was a new forum for untamed organic expression, whether all you knew was the `<p>` tag or you could write JavaScript and Perl code. The server wasn’t mine, but the code, text and images were, and on that platform, unlike any previous medium, they could be viewed instantly by anyone anywhere in the world. Those personal sites were like plots in a community garden planted and tended for their own sake.

Tim Berners-Lee, creator of the World Wide Web, said that at the beginning, “The spirit there was very decentralized. The individual was incredibly empowered. It was all based on there being no central authority that you had to go to to ask permission.”

“The vision,” said Louis Menand of The New Yorker, “was of the Web as a bottom-up phenomenon, with no bosses, and no rewards other than the satisfaction of participating in successful innovation.”

Alas, now—instead of doing our own chaotic designing, building and growing or adding something to the “sum of human knowledge”—we mostly just offer up our personal information to mammoth sites that exist for their own benefit and wonder how they were able to take advantage of us.

As Katrina Brooker said, “The power of the Web wasn’t taken or stolen. We, collectively, by the billions, gave it away with every signed user agreement and intimate moment shared with technology. Facebook, Google, and Amazon now monopolize almost everything that happens online, from what we buy to the news we read to who we like. Along with a handful of powerful government agencies, they are able to monitor, manipulate, and spy in once unimaginable ways.”